Modeling a model

All machine learning methods (including artificial intelligence, a subcategory) share the fundamental idea of fitting a function to a set of training data, then using that function to make decisions about new inputs.

This includes, of course, generative AI (which, I would argue, is more machine learning than AI). The training data is huge quantities of text or images, and the output is text or an image. This output is usually orders of magnitude more complex than what is colloquially known as “machine learning”, which outputs numbers, labels, or other less complicated things. That said, on a fundamental level they’re no different. So for ease of discussion, let’s use a much simpler model.

That's right: it's good old 'trend line' from Excel.

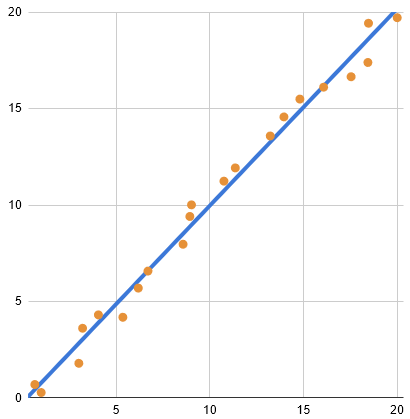

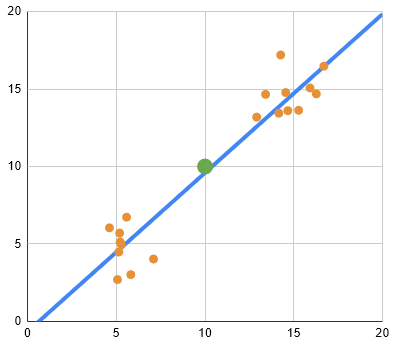

In this machine learning exercise, we're given these orange dots as our training data. To model this data, we've picked a line, and now we have to "train" the model so that its slope and intercept put it as close to the training data as possible. Because the model is so simple, Excel can actually solve for these values analytically, bypassing the longer iterative training processes necessary for more complicated models.
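If you want to peek under Excel's hood: its linear trendline is an ordinary least-squares fit, and for a straight line the best slope and intercept have a closed-form answer, no iterating required. Here's a minimal sketch of that closed form in plain Python (the name `fit_line` is my own hypothetical helper, not anything Excel exposes):

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept, solved in closed form."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = (co-variation of x and y) / (variation of x).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = sxy / sxx
    # The least-squares line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Points lying exactly on y = 2x + 1 are recovered exactly.
print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))  # (2.0, 1.0)
```

Two sums and a division: that's the whole "training" for a model this small, which is why Excel can do it instantly.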

So we've done it. We've made a machine learn. We've artificed some intelligence. We can now ask our very simple model to "generate" outputs by giving it an x, and it will give us a plausible (according to the training data) y. This works especially well because I hand-generated the training data by picking some values along x = y and then adding a bit of Gaussian noise.
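Here's a sketch of that whole toy pipeline with NumPy: make noisy points along x = y, fit the line, then "generate". The exact points I used aren't reproduced here, so the 21 x-values (0 to 20), the noise level (standard deviation 1.0), the random seed, and the helper name `generate` are all assumptions for illustration:

```python
import numpy as np

# Hypothetical stand-in for the hand-made training data: 21 points along y = x
# for x = 0..20, plus Gaussian noise with standard deviation 1.0.
rng = np.random.default_rng(seed=0)
x_train = np.arange(21, dtype=float)
y_train = x_train + rng.normal(loc=0.0, scale=1.0, size=x_train.shape)

# "Train" the two-parameter model: a degree-1 polynomial, i.e. a straight line.
slope, intercept = np.polyfit(x_train, y_train, deg=1)


def generate(x):
    """Hypothetical helper: return the model's plausible y for a given x."""
    return slope * x + intercept


print(f"slope={slope:.2f}, intercept={intercept:.2f}")
print(f"generated y at x=7.5: {generate(7.5):.2f}")
```

Because the data came from x = y, the fitted slope should come out near 1 and the intercept near 0, so the "generated" y at 7.5 lands near 7.5.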

Clearly a 500-word response to "what was the cause of WWI" is considerably more complicated than fitting a line to a set of points picked to be easy to fit a line to, but again, the underlying methodology is the same. The difference is that the 500-word essay lives in a much, MUCH higher-dimensional space (so its points sit not on a plane or in a cube or a hypercube but in a hyper-hyper-hyper...cube), and the model needs waaaay more parameters than just slope + intercept to have a hope of describing it. But the idea is the same. We took a ton of data points, tried our best to boil them down into a trillion or so parameters (roughly the scale reported for the largest language models), and now when we give it an input like "what was the cause of WWI, in 500 words", the model consults those parameters and produces something as similar as possible to the data it was trained on.

Overfitting

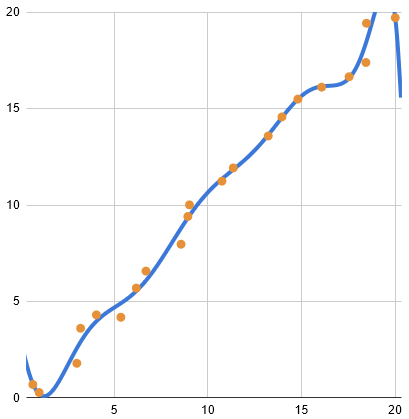

Part of the art of machine learning (vs the science) is picking the right model for your data. For example, a linear model was the right model for our data in the example above. The reason that we know this is because I generated the model according to a linear process and then added noise. But in real applications, it's often not clear what the right way to model your data is. One way to get it wrong (among many) is to pick a model that has too many parameters. For example, technically this model is a better match to our training data, but...

So while it might fit our training data better, if we asked the model to generate something around x = 19, we'd probably get something very strange. Our old model, even though it was only trained on values from 0 to 20, would keep working reliably well past that range, all the way to infinity. This one would be very, very wrong.
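To see the trade-off in numbers, here's a sketch using NumPy's `Polynomial.fit`. A degree-12 polynomial stands in for the "better match" model (the degree of the curve in the figure isn't stated), and the data, noise level, and seed are made up for illustration:

```python
import numpy as np
from numpy.polynomial import Polynomial

# Hypothetical data: noisy points along y = x for x = 0..20 (noise std 1.0).
rng = np.random.default_rng(seed=1)
x_train = np.arange(21, dtype=float)
y_train = x_train + rng.normal(0.0, 1.0, size=x_train.shape)

line = Polynomial.fit(x_train, y_train, deg=1)     # 2 parameters
wiggly = Polynomial.fit(x_train, y_train, deg=12)  # 13 parameters


def train_rmse(model):
    """Root-mean-square error of a fitted model on the training points."""
    return float(np.sqrt(np.mean((model(x_train) - y_train) ** 2)))


# More parameters always fit the training points at least as well...
print(f"training RMSE  line: {train_rmse(line):.2f}  degree-12: {train_rmse(wiggly):.2f}")

# ...but outside the training range the true relationship is still y = x,
# and only the line stays anywhere near it.
x_new = 30.0
print(f"at x={x_new}: line -> {line(x_new):.1f}, degree-12 -> {wiggly(x_new):.1f}")
```

The degree-12 curve wins on training error because it bends to chase the noise, and that same bending is what sends it off into the weeds once you leave the data.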

My argument about creativity actually has nothing to do with overfitting at all. I'm just mentioning it to make clear that it's not what I'm talking about. A large language model overfitting is probably akin to regurgitating its training data, or maybe the "there is no country in Africa that starts with K" debacle (as in, the exact wording of 'no country in Africa' overriding the learned relationship between the set of countries in Africa and their spellings; Kenya, for one, starts with K).

While overfitting certainly doesn't help with creativity, it is fundamentally a machine learning error, in the same way that a chair leg falling off when you sit on it is a carpentry error. If a model overfits, it is not well-made.

My argument is that even well-made models will never be creative.

Creativity

Creativity is an odd concept to consider with respect to machine learning. Up until, you know, 2022, they had very little to do with each other. But when generative AI happened and deep learning turned its gaze upon writing and art, suddenly the domains collided.

So now it's a very valid question: will machine-generated art and writing be creative? At the very least, they are intruding on the domain of creative work, and many people talk about the possibility. So, in machine learning terms, what is creativity?

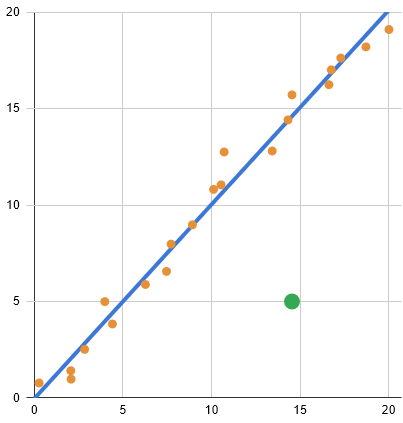

In my opinion, creativity looks like this:

To be creative is to make something heretofore unseen. In our toy example, the orange dots represent the existing body of media: art, novels, short stories, movies, the domain of your choice. To contribute creatively to that domain is to intentionally and thoughtfully diverge from that body of work. And that's fundamentally at odds with how machine learning works. The whole point of training a machine learning model is so that it won't output (15, 5). A well-trained model is not creative. A creative model is a malfunctioning model.
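One way to put a number on "the whole point of training is so that it won't output (15, 5)": measure how far a point sits from the model's prediction, in units of the noise the model expects. This sketch assumes the trained model is exactly y = x with Gaussian noise of standard deviation 1.0 (my stand-in, not actual fitted values), and `surprise` and `likelihood` are hypothetical helper names:

```python
import math

# Assumed trained model: y = x, with Gaussian noise of standard deviation 1.0.
SLOPE, INTERCEPT, SIGMA = 1.0, 0.0, 1.0


def surprise(x, y):
    """How many noise standard deviations (x, y) sits from the model's prediction."""
    residual = y - (SLOPE * x + INTERCEPT)
    return abs(residual) / SIGMA


def likelihood(x, y):
    """Gaussian density the model assigns to seeing this y at this x."""
    z = surprise(x, y)
    return math.exp(-0.5 * z * z) / (SIGMA * math.sqrt(2 * math.pi))


for point in [(15, 15), (15, 5)]:
    print(point, f"{surprise(*point):.1f} sigma", f"density={likelihood(*point):.2e}")
```

(15, 15) sits right on the model. (15, 5) is ten standard deviations off, which the model treats as essentially impossible, and training is precisely the process of making the model agree.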

Pseudo-creativity

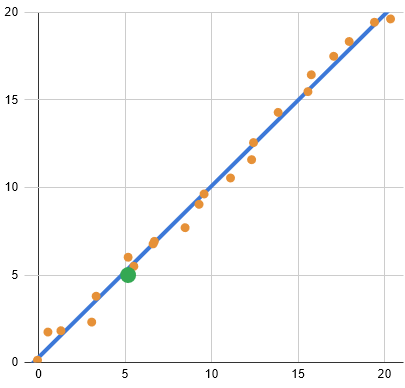

Admittedly this is a very aggressive definition of creativity. After all, isn't this also creative?

The point (5.5, 5.5) wasn't present in the original data set, yet it's something our x = y model could feasibly output. In fact, there are an infinite number of creative things our model could output. But would you enjoy reading the same book again with the characters' names changed? Would you watch a movie with the same plot points but in a different setting? These on-model creative works are probably interesting and entertaining, but after a couple of them, you'd probably start to pick up on the common tropes, the common patterns. You'd probably start to guess what happens next. You'd probably start to feel bored.

This is a problem people run into when they make procedurally generated games. If your gameplay loop isn't solid, eventually players pick up on the commonalities between the levels and things start to feel same-y (see: No Man's Sky at release, Starbound). There's an art and a practice to designing good procedural generation. It's one of those things that even big game studios get wrong on the regular. It remains to be seen whether a machine learning model can learn the trick.

The spaces between

Another potential avenue for machine creativity is as follows:

The media analogy here might be two sets of genres, maybe samurai movies in one and space operas in the other. The middle point would be the idea of combining elements of both, like flashy sword battles, but set in space. And it's definitely within the potential outputs of our well-trained model.

Unfortunately, though, there's a real argument to be made that the line isn't the right model here. The right model is two centroids, one at (5, 5) and another at (15, 15), which is how I generated these data points, after all. Excel's linear trendline can't represent that model, and neither can any of its polynomial ones, but with something like a trillion parameters, I'd bet you that the latest ChatGPT could find a way to represent this relationship. And then our lovely (10, 10) laser-samurais-on-battleships would never come to be.
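Here's a sketch of that two-centroid situation in NumPy. The centroids come from the text; the spread around each centroid (1.0), the 15 points per cluster, the seed, and the name `mixture_density` are assumptions. It compares what the trend line predicts at x = 10 with how plausible the "correct" two-Gaussian model finds (10, 10):

```python
import numpy as np

# Hypothetical reconstruction of the data: two clusters centred on (5, 5) and
# (15, 15), each point jittered by isotropic Gaussian noise (std 1.0).
rng = np.random.default_rng(seed=2)
centroids = np.array([[5.0, 5.0], [15.0, 15.0]])
SPREAD = 1.0
points = np.vstack([c + rng.normal(0.0, SPREAD, size=(15, 2)) for c in centroids])

# Model A: a straight trend line through all the points.
slope, intercept = np.polyfit(points[:, 0], points[:, 1], deg=1)


# Model B: the "correct" model, an equal-weight mixture of two 2-D Gaussians.
def mixture_density(p):
    sq_dist = np.sum((centroids - np.asarray(p, dtype=float)) ** 2, axis=1)
    per_cluster = np.exp(-sq_dist / (2 * SPREAD**2)) / (2 * np.pi * SPREAD**2)
    return float(per_cluster.mean())


print(f"trend line at x=10: y = {slope * 10 + intercept:.1f}")
print(f"mixture density at (5, 5):   {mixture_density((5, 5)):.2e}")
print(f"mixture density at (10, 10): {mixture_density((10, 10)):.2e}")
```

The trend line happily predicts y ≈ 10 at x = 10, while the mixture model rates (10, 10) tens of billions of times less likely than a point sitting on a centroid. The better model is the one that kills the samurai.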

Once again, our innovative creation was a fluke of bad machine learning. The better the models get, the more parameters they have, and the more relationships they can encode, the less likely they are to make these kinds of errors, aka these kinds of creativity.

Creative prompting

But generative AI has made creative things, has it not? After all, in the above example, you could ask it to mash up a samurai movie and a space opera and it would. Is that not creative?

It is, but it wasn't the model that was creative, it was you.

And just as you made the creative choice to put these two disparate things together, every choice in every creative endeavor is a creative one. First maybe you come up with a concept. Then you have to come up with a character. What's their name? What do they care about? How are you going to introduce them to your readers? What is the first line of your book? What is the first word?

Every single decision is a creative decision, and at every point, you, as the creator, are making an intentional (even if subconscious) choice to either follow form or break it. And when you put all those choices together, you get something that is wholly, uniquely reflective of you.

But if you let the machine decide, it is always going to choose to follow. And that infinite fractal of creativity gets cut short the second you stop caring. That opportunity to manifest something that is reflective of who you are as an individual comes to an end. And it really is a pity.

Who needs creativity?

Not every piece of art has to push the bounds of creativity. Sometimes you wanna just sit back and relax and watch something that makes you feel good. Sometimes something derivative with the barest touch of novelty is all you need. Even if generative AI is never quite able to manage full-on creativity, maybe it can fulfill that need.

The danger here is complacency.

Because while not everyone wants something new all of the time, eventually everyone does. And if you've drowned the media landscape in cheap derivatives, if you've removed the ways for creative people to feed and house themselves, our cultural ecosystem will wither and die, and all we'll have is machine-generated art, playing off itself in endless riffs, but never quite managing to make something new.

And here's the trick: the complacency is already here. Do you feel it? There's something hollow in Star Wars 7, 8, and 9. The MCU can't quite manage to bottle that lightning again. Why are there so many trilogies with a brilliant first book and a mediocre second and third? Why does every triple-A game have the same mechanics and game loop?

AI-generated content isn't even here yet, but its ideologies and methodologies have been in full force for the last decade. At some point, the gatekeepers of our society's art stopped taking risks: they look at the crop of candidates for the next big thing and don't evaluate them on their own merits. They measure their similarity to the latest known successes, and then align them to that model as closely as they can. It's not generative AI, but it makes the same choices. It has the same shape. It's slower and less efficient, but it's already here.

Generative AI isn't going to replace creativity. It can't.

It already has.